Input and Output

This course focuses on a specific kind of video game: pseudo-realistic realtime simulations. “Pseudo” means that this is not about highly realistic flight simulators for the military or anything like that. Most of the time, games focus on appearing realistic rather than actually being realistic. This allows for many optimizations and, more often than not, is what makes it possible to run games in realtime in the first place. Applying such optimizations efficiently, though, requires some knowledge of how human perception works. At the other extreme, aspiring game designers might be put off by any aspiration toward realism at all. However, art education of all kinds starts out by teaching how to achieve realism, or, as a saying often attributed to Picasso goes: “Learn the rules like a pro, so you can break them like an artist”.

Video games, like any computer application, take in some sensor data (mostly from pressure sensors, so-called buttons), do some calculations and then stimulate the sensors of the human who provided the input data (mostly using monitors and speakers). This is done over and over again, preferably more than 20 times a second. With the possible exception of some strategy games, games rarely stand still and just wait for events the way typical desktop applications do. Enemy positions are updated, sound buffers are refilled and the viewport is redrawn, even if the computer does not receive any input events.
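
The cycle described above can be sketched as a minimal loop. This is an illustration under stated assumptions, not an engine API: `run_game_loop`, `poll_input`, `update` and `render` are hypothetical names, and the loop assumes a fixed target frame rate with a fixed time step.

```python
import time

def run_game_loop(poll_input, update, render, frames, target_fps=60):
    """Minimal fixed-rate game loop sketch (all callbacks are hypothetical).

    update and render are called every frame, whether or not
    poll_input reported any events.
    """
    frame_time = 1.0 / target_fps  # fixed time step in seconds
    for _ in range(frames):
        start = time.perf_counter()
        events = poll_input()        # may well be an empty list
        update(events, frame_time)   # e.g. enemies move even without input
        render()                     # the viewport is redrawn every frame
        # Sleep away whatever is left of this frame's time budget.
        elapsed = time.perf_counter() - start
        if elapsed < frame_time:
            time.sleep(frame_time - elapsed)
```

Because `update` and `render` run on every iteration, the game world keeps moving on its own. A frame that takes longer than its budget simply runs late in this sketch; real games need a strategy for that case.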

Waves

Understanding the sensor technology on both sides (machine and human) is key to making games more immersive. The most important sensors in use when video games are played are arguably eyes and ears. Both of these sensor types come in pairs, which lets the brain derive 3D positional information from the input data. Both also measure specific kinds of waves.

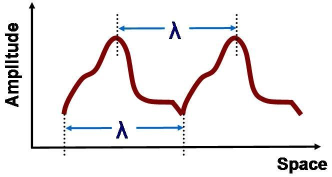

A wave is generally defined by its propagation and oscillation directions, its amplitude, its speed and its wavelength. For light and sound waves, the speed is mostly constant within a given medium. When the actual waveform is of importance, it is not sufficient to work with those base values; instead the wave has to be sampled, constructing a time-indexed array of amplitude values.
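
As a small illustration of sampling, the sketch below turns a pure sine wave into a time-indexed list of amplitudes. The function name `sample_sine` is hypothetical, and the pure sine wave and the CD-style rate of 44100 samples per second are assumptions chosen for the example.

```python
import math

def sample_sine(frequency_hz, amplitude, duration_s, sample_rate_hz):
    """Sample a pure sine wave into a list of amplitude values.

    Entry i holds the amplitude at time t = i / sample_rate_hz.
    """
    count = round(duration_s * sample_rate_hz)
    return [
        amplitude * math.sin(2.0 * math.pi * frequency_hz * i / sample_rate_hz)
        for i in range(count)
    ]

# 10 ms of a 440 Hz tone at 44100 samples per second.
# One period of this wave spans roughly 100 samples (44100 / 440).
samples = sample_sine(440.0, 1.0, 0.01, 44100)
```

Once sampled, the wave is just an array, which is exactly the representation the audio sections further below work with.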

Eyes and Monitors

The eye is an advanced optical sensor that measures certain properties of light: electromagnetic, transverse waves with a wavelength between roughly 400 and 700 nanometers. Transverse waves oscillate in a direction perpendicular to their direction of propagation. Light waves therefore have five defining properties: a position, a direction of propagation, a direction of oscillation, a wavelength and an intensity. The speed of light can generally be set to a constant value of very, very fast. All of this is already a rough physical approximation, but it is currently sufficient for realtime game graphics.

The human eye measures only four of these values; it cannot see the oscillation direction (the polarization) of light. That is not true for all eyes: many other animals can indeed see it. Position and direction of propagation are measured indirectly using a system of lenses, a topic to be discussed further in future chapters about 3D graphics. Light intensity is measured directly by the rod and cone cells, lots of tiny sensors at the back of the eyeball (strictly speaking, what is measured is not purely the wave amplitude but the energy of the light, which also depends on its frequency).

Wavelength is measured only by the cone cells, and not directly: instead there are three different kinds of cone cells, each triggered by a different range of wavelengths. Those ranges are called red, green and blue (the so-called primary colors). When different cone cells are triggered at the same position, the brain reports an interpolated color in between the measured colors. However, this interpolation can fail: when a blue light wave and a red light wave end up at the same position in your eye, the blue and red cells are triggered. The interpolated color would now be green, but the brain also recognizes that the green cells were not triggered. When that happens, the brain reports a color that does not actually correspond to any wavelength. That color is magenta, and it is the reason there is no magenta in a rainbow. This also makes color (hue and saturation) a 2D value. Add intensity and it becomes a 3D value.
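
The claim that color is 2D plus intensity can be made concrete with normalized rg chromaticity, a standard way of factoring brightness out of an RGB triple. In this sketch the hypothetical function `split_color` uses the plain sum R + G + B as a crude stand-in for intensity; real color spaces weight the channels differently.

```python
def split_color(r, g, b):
    """Split an RGB triple into (intensity, 2D chromaticity).

    Chromaticity is normalized rg: r and g divided by the sum. The blue
    share is implied (1 - r - g), so the remaining color information is
    two-dimensional. The sum is only a crude stand-in for intensity.
    """
    intensity = r + g + b
    if intensity == 0:
        return 0.0, None  # black has no defined chromaticity
    return intensity, (r / intensity, g / intensity)
```

For example, `split_color(1.0, 0.0, 1.0)` and `split_color(2.0, 0.0, 2.0)` share the same chromaticity `(0.5, 0.0)`, magenta, and differ only in intensity.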

Monitors send light to the eye using a surface plastered with tiny light emitters called pixels. But it is not sufficient for a pixel to just send out a single, configurable wavelength, because no single wavelength produces magenta. Instead, monitors mirror the design of the human eye: one pixel consists of three subpixels, red, green and blue. Monitors just send out the three wavelengths that trigger human color receptors. That is bad news for the zebra finch, which is one of several species of birds that can perceive four primary colors. Extra-terrestrial life forms will most likely also be confused when they see a color monitor, so please avoid showing them one.

Putting images on a monitor using a computer is really simple. Computers manage an array of red/green/blue values at a specific memory address called the framebuffer. This array mimics the structure of the monitor, which is itself an array of red/green/blue values. A little piece of hardware takes care of transferring your array to the monitor at constant intervals, typically 60 times per second. Timing that correctly, however, can be tricky: changing the image array while it is being transferred to the monitor should be avoided, because otherwise the monitor shows part of the old and part of the new image at the same time (the old one on top and the new one at the bottom), an artifact known as tearing. To avoid that interesting graphics effect, the hardware reports a small time interval during which it is safe to change images: the vertical blanking interval (vblank). In ancient times (aka the eighties and early nineties), games changed their images during that interval.

Today computers simply use two framebuffers (a technique called double buffering). A new image is constructed in one framebuffer, and when it is finished the hardware is instructed to switch to this framebuffer during the next vblank interval, while the program starts drawing to the other framebuffer. It is kind of sad, though, when a game runs a little slow and misses a vblank: the game then has to wait for the next one, a full refresh interval (about 16.7 ms at 60 Hz). For that reason some gamers prefer to ignore the vblank signal (disabling vsync), accepting regular tearing instead. Games can use three buffers (triple buffering) to try to mitigate this problem. Even better, newer monitors support variable refresh rates that adjust to the needs of the application (see AMD FreeSync or NVIDIA G-Sync).
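
A toy model can make the framebuffer layout and the vblank-synchronized swap concrete. The class `DoubleBufferedDisplay` and its methods are hypothetical; real hardware and graphics APIs expose this very differently. The sketch assumes a framebuffer stored row by row as 3 bytes (red, green, blue) per pixel.

```python
class DoubleBufferedDisplay:
    """Toy model of double buffering (all names are hypothetical).

    Each framebuffer is a flat bytearray of width * height * 3 bytes,
    stored row by row as red, green, blue per pixel.
    """

    def __init__(self, width, height):
        self.width = width
        self.height = height
        size = width * height * 3
        self.buffers = [bytearray(size), bytearray(size)]
        self.front = 0             # index of the buffer being scanned out
        self.swap_pending = False

    @property
    def back_buffer(self):
        return self.buffers[1 - self.front]

    def set_pixel(self, x, y, rgb):
        # The program only ever draws into the back buffer.
        offset = (y * self.width + x) * 3
        self.back_buffer[offset:offset + 3] = bytes(rgb)

    def request_swap(self):
        # Called by the program once the new frame is finished.
        self.swap_pending = True

    def on_vblank(self):
        # Simulated vertical blanking interval: the only moment at which
        # the buffer the display reads from is switched.
        if self.swap_pending:
            self.front = 1 - self.front
            self.swap_pending = False

    def scanout(self):
        # What the monitor would receive during the next refresh.
        return bytes(self.buffers[self.front])
```

A pixel drawn with `set_pixel` only becomes visible in `scanout()` after `request_swap()` has been called and the next `on_vblank()` has happened, so the monitor never sees a half-finished frame. After a swap, the new back buffer still holds an older frame, which this sketch assumes the program fully redraws.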

Monitors add another complication by not outputting light intensities that are linear in the input data. Instead, the input data is transformed using a gamma curve, which is a simple power function (output intensity = input intensity^gamma, with intensities normalized to the range 0 to 1). The exponent of that function is called the gamma value. Monitors usually operate with a gamma value of 2.2. Image data saved on a computer is generally encoded using the inverse exponent of about 0.45 (1/2.2), which cancels out the monitor's gamma transformation, making the process of displaying an image simple again. Gamma-encoded values are, however, not well suited for internal lighting calculations in games, because such calculations assume linear intensities.

A common case in games is to do some calculations on textures (images) and output the result to the screen. The textures are gamma-encoded for display on a screen, so to get them into linear color space this encoding has to be reversed. This is done by raising each normalized color value (componentwise for red, green and blue) to the power of gamma = 2.2. The operation is then carried out in linear space. To output the result to the screen, the values have to be gamma-encoded again, this time using an exponent of 1/2.2.
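
The decode, operate, encode sequence can be sketched for a simple operation, blending two colors. This assumes the plain power-law gamma of 2.2 from the text (the sRGB standard actually uses a slightly different piecewise curve) and color components normalized to 0..1. The function names are hypothetical.

```python
GAMMA = 2.2  # simple power-law approximation; sRGB uses a piecewise curve

def to_linear(encoded):
    """Gamma-decode one normalized (0..1) color component."""
    return encoded ** GAMMA

def to_display(linear):
    """Gamma-encode one normalized (0..1) linear component for output."""
    return linear ** (1.0 / GAMMA)

def blend(color_a, color_b, t):
    """Interpolate two gamma-encoded RGB colors in linear space."""
    return tuple(
        to_display((1.0 - t) * to_linear(a) + t * to_linear(b))
        for a, b in zip(color_a, color_b)
    )

black, white = (0.0, 0.0, 0.0), (1.0, 1.0, 1.0)
print(blend(black, white, 0.5))  # each channel is about 0.73, not 0.5
```

Averaging the encoded values directly would give 0.5, which the monitor displays at a linear intensity of only about 0.22, so blending in gamma space tends to look too dark.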

Visual Field

Unlike the eyes of many animals, human eyes are specialized in perceiving depth at the cost of a wide field of view. The human field of view is ~180 degrees horizontally and ~135 degrees vertically. However, the quality of the visual field is not uniform. For example, we can only perceive depth across ~135 degrees horizontally, since the remaining visual field is not visible to both eyes simultaneously. Also, the cone and rod cells are not uniformly distributed: cones are concentrated in the center of the field of view, leading to good color vision and sharp detail there. Rods dominate the periphery of the visual field, leading to good sensitivity to low light and motion there.

The fovea centralis is the area roughly at the center of the retina with the highest density of cones, which is why this is where humans see the most detail. Foveated rendering is an approach to optimizing rendering that renders only the area the eyes are focusing on at full detail, since the details in the surrounding areas will not be perceived by the user anyway.
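
A foveated renderer needs some rule for how much detail to spend at a given distance from the gaze point. The sketch below shows one possible rule; the function names, the flat-screen pixels-per-degree conversion and the 5 and 20 degree thresholds are all illustrative assumptions, not measured values or a specific product's behavior. The gaze point itself would have to come from an eye tracker.

```python
import math

def eccentricity_deg(gaze, point, pixels_per_degree):
    """Approximate angular distance (degrees) of a screen point from the gaze.

    Assumes a flat screen with a constant pixels-per-degree ratio, which
    is only a rough approximation away from the screen center.
    """
    dx = point[0] - gaze[0]
    dy = point[1] - gaze[1]
    return math.hypot(dx, dy) / pixels_per_degree

def resolution_scale(ecc_deg):
    """Hypothetical falloff: full detail near the fovea, less further out.

    The 5 and 20 degree thresholds are illustrative only.
    """
    if ecc_deg < 5.0:
        return 1.0   # full resolution around the gaze point
    if ecc_deg < 20.0:
        return 0.5   # half resolution in the near periphery
    return 0.25      # quarter resolution in the far periphery
```

With a gaze at the center of a 1920x1080 screen and an assumed 40 pixels per degree, a point 400 pixels to the right lies 10 degrees out and would be rendered at half resolution.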

Depth Perception

The human eyes are separated by the interpupillary distance (IPD), which is ~65 mm on average but differs between individuals. Our brain uses the fact that the two eyes move and see independently to perceive depth. There are three binocular cues (using both eyes) and a range of monocular cues (for which only one eye is needed). Thanks to the monocular cues, we can perceive depth to a certain degree with only one eye, which is why the world doesn’t suddenly appear to be 2D when you close one of your eyes.

The three binocular cues we are examining here are:

- Stereopsis: This cue uses the fact that the images from the two eyes are offset from each other: an object seen by one eye is seen from a slightly different perspective by the other. This cue is useful up to a distance of about 200 m, depending on circumstances such as the size of the object and the lighting conditions.

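- Convergence: Vergence is the simultaneous movement of both eyes in opposite directions so that both point at the same object. Convergence means turning the eyes inward, as when looking at a near object (taken to the extreme, this is looking cross-eyed); divergence is the opposite, outward movement. Our brain can measure the angle between the eyes to estimate how far away an object is. This cue is usable up to distances of about 10 meters.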

- Shadow Stereopsis: It has been shown that viewers perceive depth in stereo images in which the objects themselves have no offset, but their shadows do.
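
Two small calculations tie these binocular cues to numbers. The sketch assumes the average IPD of 65 mm from the text and a fixation point straight ahead. `vergence_angle_deg` shows why convergence stops being useful at larger distances. `eye_positions` is a hypothetical helper showing how a stereo renderer could place one camera per eye to produce the offset images that stereopsis relies on.

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.065):
    """Angle between the two lines of sight when fixating a point.

    Assumes the point lies straight ahead, midway between the eyes.
    """
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))

def eye_positions(head, right, ipd_m=0.065):
    """Left/right camera positions for stereo rendering (hypothetical helper).

    head is the point midway between the eyes, right a unit vector
    pointing to the viewer's right; each eye sits half the IPD to a side.
    """
    half = ipd_m / 2.0
    left_eye = tuple(h - half * r for h, r in zip(head, right))
    right_eye = tuple(h + half * r for h, r in zip(head, right))
    return left_eye, right_eye

for d in (0.5, 2.0, 10.0, 200.0):
    print(f"{d:6.1f} m -> {vergence_angle_deg(d):.3f} degrees")
```

The vergence angle drops from roughly 7.4 degrees at half a meter to well under half a degree at 10 meters, so beyond that range the changes become too small to be a useful cue.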

Monocular cues are interesting when it comes to computer game history as well as art history, since they have been used successfully to give (animated) images depth. Some examples are:

- Motion Parallax: When the viewer moves, objects that are far away appear to move more slowly than objects that are closer. “Parallax scrolling” was a buzzword in the 1980s and 1990s for platform games that used this effect, scrolling distant background layers more slowly than the foreground.

- Lighting and Shading: Shadows and lighting provide important information about the relative size, position and surface of objects. Without them, images appear flat and unrealistic.

- Aerial perspective: This cue uses the observation that, in the Earth’s atmosphere, objects that are far away from the viewer (such as mountains on the horizon) approach the color of the horizon. Early 3D games, especially on consoles, often had to use very dense fog (which can be seen as a very intense aerial perspective) as an optimization, since they could not render enough objects at arbitrary distances.

- Texture gradient: Uniform textures that recede into the distance appear more densely packed. Imagine standing next to a brick wall that recedes into the distance: the bricks become ever smaller, and more of them fit into the same area of your visual field.
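
Two of these cues translate almost directly into code. The sketch below shows parallax scrolling as a per-layer scroll factor and aerial perspective as linear distance fog. Both function names and the depth-factor convention are hypothetical; linear fog is only one of several fog models (fixed-function OpenGL, for instance, also offered exponential variants).

```python
def parallax_offset(camera_x, depth_factor):
    """Horizontal scroll offset of a layer (parallax scrolling).

    depth_factor is 1.0 for the foreground and smaller for layers meant
    to appear farther away, so those scroll more slowly.
    """
    return camera_x * depth_factor

def apply_linear_fog(color, fog_color, distance, fog_start, fog_end):
    """Blend a color toward the fog color with distance (aerial perspective).

    Objects closer than fog_start are unaffected; at fog_end and beyond
    they take on the fog color completely.
    """
    f = (distance - fog_start) / (fog_end - fog_start)
    f = min(max(f, 0.0), 1.0)  # clamp the fog factor to 0..1
    return tuple((1.0 - f) * c + f * fc for c, fc in zip(color, fog_color))
```

A mountain layer with `depth_factor = 0.25` moves a quarter as far as the foreground when the camera scrolls. Choosing the fog color close to the sky or horizon color hides the point where geometry stops being drawn.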

Ears and Speakers

Sound is made up of longitudinal air pressure waves (oscillation and propagation directions are parallel). Ears and speakers work like drums: thin, oscillating surfaces. The speaker oscillates based on the data it receives; the ear sends data to the brain based on how it oscillates. This is an interesting difference to how monitors work: concrete waveforms are constructed directly, which is a much more physically correct approach. As a consequence, however, audio is much more timing-sensitive. On the software side, the timing problem is reduced using small ring buffers that have a read position for the sound hardware and a write position for the software. The software has to take good care to always keep its write position ahead of the read position; if the hardware catches up (a buffer underrun), it plays missing or stale data, which produces very unpleasant sounds.
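
The read/write position scheme can be modeled in a few lines. `AudioRingBuffer` and its methods are hypothetical and only simulate the hardware side; real audio APIs differ. In this model positions grow without bound and are wrapped with a modulo when indexing, and an underrun is filled with silence.

```python
class AudioRingBuffer:
    """Toy ring buffer shared by a software writer and a hardware reader.

    Names are hypothetical. Positions only ever increase and are wrapped
    with modulo when indexing into the storage.
    """

    def __init__(self, capacity):
        self.data = [0.0] * capacity
        self.capacity = capacity
        self.read_pos = 0   # advanced by the (simulated) sound hardware
        self.write_pos = 0  # advanced by the game's mixing code

    def free_space(self):
        return self.capacity - (self.write_pos - self.read_pos)

    def write(self, samples):
        """Write as many samples as fit; return how many were written."""
        n = min(len(samples), self.free_space())
        for i in range(n):
            self.data[(self.write_pos + i) % self.capacity] = samples[i]
        self.write_pos += n
        return n

    def hardware_read(self, count):
        """Simulate the sound hardware consuming samples.

        If the writer has fallen behind (an underrun), there is no new data
        to play; this model fills the gap with silence, whereas real
        hardware may replay stale data, which is the audible glitch.
        """
        available = self.write_pos - self.read_pos
        out = []
        for i in range(count):
            if i < available:
                out.append(self.data[(self.read_pos + i) % self.capacity])
            else:
                out.append(0.0)  # underrun
        self.read_pos += min(count, available)
        return out
```

The software's job is to call `write` often enough that `hardware_read` never runs past the data that has been written.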

A sound saved in a computer is a one-dimensional array of wave amplitude values (a sampled wave). To play the sound, it has to be copied to the ring buffer starting at the current write position. The ring buffer, though, can easily be smaller than the sound to be played. For that reason, sounds are copied to the buffer in little pieces, keeping note of the playing position of every sound for the next iteration of the mixing loop. To play multiple sounds at the same time, they have to be mixed. Sound mixing is directly based on what happens when waves interact with each other: waves are simply added together. This regularly leads to an unwanted effect: when two identical sounds are played at the same time (say, two explosion sounds), they sound like just one, very loud sound. This rarely happens outside of virtual worlds because two real-world sounds are rarely exactly identical. The problem can be avoided by adding variations of sounds that are likely to play more than once at the same time.
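
The piecewise mixing loop can be sketched as follows. The `[samples, position]` representation and the name `mix_chunk` are hypothetical. The final clamp to -1..1 is an added assumption that the text does not mention; it is needed because sums of loud sounds can exceed the valid range.

```python
def mix_chunk(active_sounds, chunk_size):
    """Mix the next chunk_size samples of all playing sounds.

    active_sounds is a list of [samples, position] pairs (a hypothetical
    representation): samples is the sampled wave, position the index of
    the next sample to play. Positions are advanced in place so the next
    call continues where this one stopped; finished sounds are removed.
    """
    mixed = [0.0] * chunk_size
    for sound in active_sounds:
        samples, position = sound
        piece = samples[position:position + chunk_size]
        for i, value in enumerate(piece):
            mixed[i] += value  # superposition: waves simply add up
        sound[1] = position + len(piece)
    # Drop sounds that have played to the end.
    active_sounds[:] = [s for s in active_sounds if s[1] < len(s[0])]
    # Clamp to the valid amplitude range (an assumption: clipping is
    # one simple way to handle sums that leave -1..1).
    return [max(-1.0, min(1.0, v)) for v in mixed]
```

Mixing two copies of the same sound that start together simply doubles every sample, which is exactly the "one very loud sound" effect described above. The resulting chunk would then be written into the ring buffer.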

Other Human Sensors

Humans have many more sensors than just eyes and ears, but up to now games focus very much on those two. Some input devices contain small motors that stimulate human pressure sensors. The effect can be significant, but it really is a very simple feature: usually a single value controls the amount of force used to shake your hands. Arcade games sometimes managed to trigger additional sensors, but that is pretty much a dead market segment by now.

Buttons and Fingers

Keyboards, joypads, mice and touchpads are really simple input devices, but they are very fast and reliable. They are also operated by the fingers, which are quite precise and capable of transferring surprisingly high data rates. These devices generate just a small set of values, which generally should be checked every frame because they tend to change very quickly.
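
Checking buttons every frame is usually combined with remembering the previous frame's state, so that a game can tell a new press from a held button. In this sketch `ButtonState` is hypothetical, and `read_buttons` stands in for whatever platform call returns the set of buttons currently held down.

```python
class ButtonState:
    """Tracks buttons across frames to detect presses and releases.

    read_buttons (passed to update) is a hypothetical function returning
    the buttons currently held down; it is polled once per frame.
    """

    def __init__(self):
        self.previous = set()
        self.current = set()

    def update(self, read_buttons):
        self.previous = self.current
        self.current = set(read_buttons())

    def held(self, button):
        return button in self.current

    def pressed(self, button):
        # Down this frame, but not in the previous one.
        return button in self.current and button not in self.previous

    def released(self, button):
        # Down in the previous frame, but not in this one.
        return button in self.previous and button not in self.current
```

A jump would typically trigger on `pressed("jump")` so that holding the button does not jump again every frame, while movement would check `held(...)`.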

Other Computer Sensors

More complex sensors are only rarely used for games. Most notably, there have been some mildly successful camera-based games (EyeToy, Kinect) and some very successful accelerometer-based games (Wii). Compared to buttons, those sensors are generally much less reliable: they do not return exact values, and some add noticeable amounts of delay. They also primarily measure limb movements, which are much slower and less precise than finger movements. More often than not, game designs have to be adjusted to compensate for these inaccuracies.
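
One common way to compensate for noisy sensor values is to smooth them, for example with an exponential moving average. The sketch below (the class name is hypothetical) also shows the trade-off: smoothing reduces noise but adds delay on top of whatever latency the sensor already has.

```python
class ExponentialSmoother:
    """Exponential moving average for a noisy sensor value.

    alpha in (0, 1]: higher values follow the raw input quickly but keep
    more noise; lower values are smoother but lag further behind.
    """

    def __init__(self, alpha):
        self.alpha = alpha
        self.value = None

    def update(self, raw):
        if self.value is None:
            self.value = raw  # start from the first reading
        else:
            self.value += self.alpha * (raw - self.value)
        return self.value
```

With `alpha = 0.5`, a sudden jump of the raw reading from 0 to 1 produces smoothed values of 0.5 after one update and 0.75 after two, so a quick motion is only registered fully several frames later.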